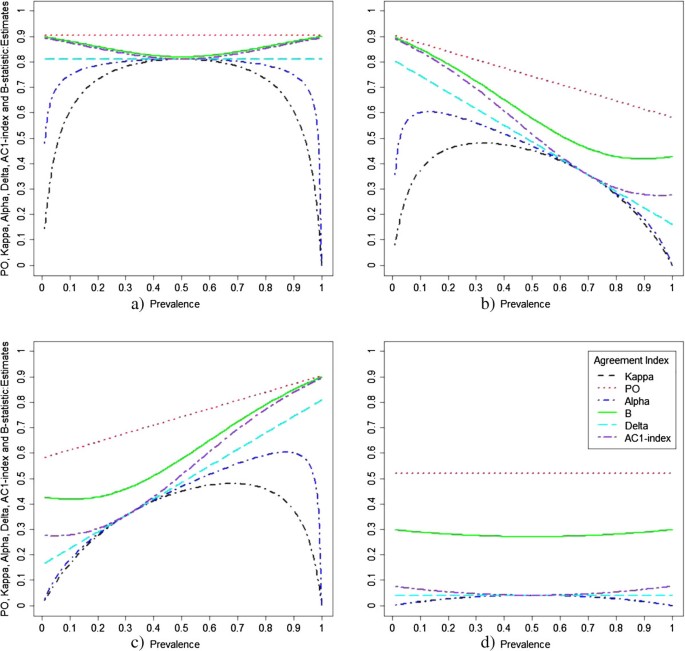

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

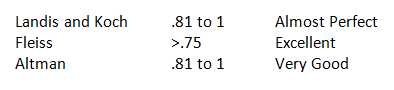

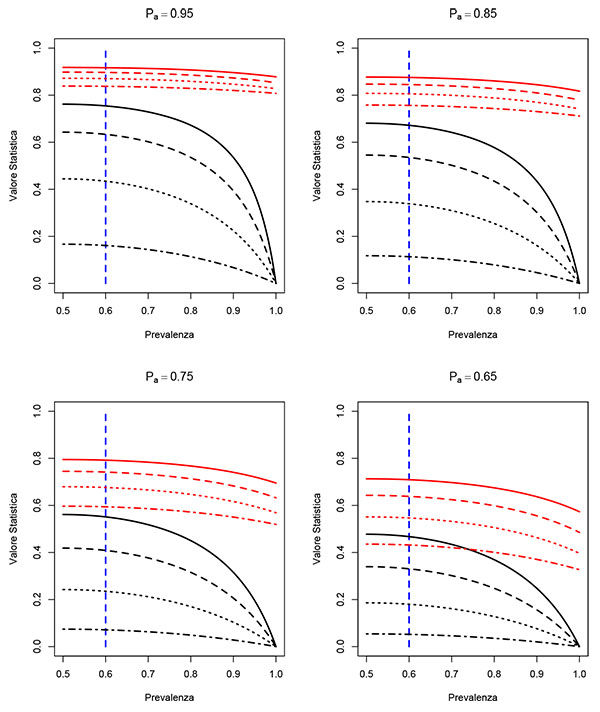

![More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/1-Figure1-1.png)

[PDF] More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar

Beyond kappa: an informational index for diagnostic agreement in dichotomous and multivalue ordered-categorical ratings | SpringerLink

Systematic literature reviews in software engineering—enhancement of the study selection process using Cohen's Kappa statistic - ScienceDirect

![Understanding interobserver agreement: the kappa statistic | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/3-Table2-1.png)